A few years ago, AI agents were mostly experimental, exciting demos tucked away in innovation labs or niche pilot programs. Today, enterprise AI agents are stepping out of the sandbox and into the operational core of large organizations. They’re not just handling one-off tasks; they’re running multi-step workflows, collaborating with humans, and making context-aware decisions that used to be reserved for seasoned employees.

From autonomous customer service to supply chain optimization, these agents are transforming how enterprises operate. And for CTOs, the question has shifted dramatically. It’s no longer “Should we explore AI agents?” It’s “How do we scale them without losing control, compliance, or ROI?”

Scaling too fast without the right guardrails can turn a promising initiative into a costly, even risky, liability. This guide breaks down what CTOs need to know before taking enterprise AI agents from successful pilot to enterprise-wide deployment.

Why Enterprise AI Agents Aren’t Just “Smarter Chatbots”

A common trap is to mentally lump enterprise AI agents in with the chatbots of 2017. Yes, both can interact with users. But enterprise AI agents are far more capable and autonomous:

- Multi-System Integration: They connect to multiple enterprise systems and act across them, for example responding instantly to high-intent inbound leads, even at 2 AM.

- Context-Aware Decision-Making: They apply policies, priorities, and historical patterns to make informed choices.

- Collaboration Skills: They work with humans and other AI agents through APIs, messaging platforms, and even voice.

- Self-Learning Loops: They learn from outcomes and continuously improve.

Think of them less like “virtual assistants” and more like digital colleagues that understand business rules, compliance standards, and operational nuances.

The Scaling Temptation (and Its Hidden Dangers)

Here’s the scenario: your pilot AI agent cuts customer call handling time by 40%. Executives are thrilled. The natural impulse? Roll it out everywhere immediately.

But scaling enterprise AI agents isn’t just about duplicating a working model. Without the right architecture, governance, and human-in-the-loop safeguards, scaling can introduce:

- Model Drift: Performance degrades when the agent encounters new, noisier data in the wild.

- Integration Fragility: Weak connectors between enterprise systems can cause cascading workflow failures.

- Shadow AI: Teams build unauthorized agents, bypassing security and compliance.

- Runaway Costs: Inference expenses for LLM-powered agents can skyrocket without optimization.

The CTO’s responsibility is to shift from “quick wins” thinking to a maturity framework: a measured, stage-by-stage approach.

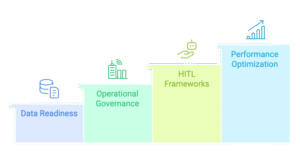

The Four Pillars of Scaling Enterprise AI Agents

Enterprise Data Readiness

An AI agent is only as good as the data it consumes. Before scaling:

- Data Quality: Remove inconsistencies in ERP, CRM, and operational systems. Garbage in equals garbage out at enterprise scale.

- Real-Time Access: Agents need up-to-the-minute data. Stale inputs can cause costly errors.

- Security & Compliance: Ensure HIPAA, GDPR, SOC 2, or other relevant standards are met before agents touch sensitive information.

- Pro Tip: A centralized enterprise data catalog with metadata tagging can help agents locate and trust the right datasets instantly.
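One way to guard against stale inputs is a freshness check that runs before the agent acts on a record. The sketch below is illustrative only: the `is_fresh` helper and the five-minute default are assumptions, not values from any particular platform, and the right tolerance depends on the workflow.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(
    last_updated: datetime,
    max_age: timedelta = timedelta(minutes=5),  # assumed tolerance, tune per workflow
    now: Optional[datetime] = None,
) -> bool:
    """Return True if the record was updated within max_age of now."""
    current = now if now is not None else datetime.now(timezone.utc)
    return current - last_updated <= max_age


# Example: a record updated 10 minutes ago fails the default 5-minute check.
reference = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
stale_record_time = reference - timedelta(minutes=10)
recent_record_time = reference - timedelta(minutes=2)
```

An agent that finds `is_fresh(...)` to be false could refetch the record or escalate instead of acting on it.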

Operational Governance

Scaling without governance is like deploying drivers without traffic laws.

- Agent Identity Management: Give each AI agent a unique identity, role-based access, and an audit trail in your IAM system.

- Policy-Driven Boundaries: Define which decisions agents can make autonomously and when to escalate.

- Auditability & Explainability: Store logs, decision trees, and LLM prompts for compliance reviews.

- Case in Point: A global bank built a “decision justification” feature for its fraud detection agents, logging both the outcome and the reasoning path for regulators.
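The governance points above (a unique agent identity, policy-driven boundaries, and an audit trail) can be sketched in a few lines. This is a minimal illustration under stated assumptions: `AgentPolicy`, `AuditLog`, `authorize`, and the monetary limit are hypothetical names and values, and a real deployment would back them with an IAM system rather than in-memory objects.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentPolicy:
    agent_id: str                 # unique identity for the agent
    allowed_actions: frozenset    # actions this agent's role permits
    max_amount: float             # hypothetical limit for autonomous decisions


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, agent_id, action, amount, decision, reason):
        # Store both the outcome and the reason so reviewers can trace it later.
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "amount": amount,
            "decision": decision,
            "reason": reason,
        })


def authorize(policy: AgentPolicy, action: str, amount: float, log: AuditLog) -> str:
    """Return 'allow' if the action is within policy, otherwise 'escalate'."""
    if action not in policy.allowed_actions:
        decision, reason = "escalate", "action outside agent role"
    elif amount > policy.max_amount:
        decision, reason = "escalate", "amount exceeds autonomous limit"
    else:
        decision, reason = "allow", "within policy"
    log.record(policy.agent_id, action, amount, decision, reason)
    return decision


policy = AgentPolicy("refund-agent-01", frozenset({"issue_refund"}), 100.0)
log = AuditLog()
small = authorize(policy, "issue_refund", 50.0, log)
large = authorize(policy, "issue_refund", 500.0, log)
other = authorize(policy, "close_account", 0.0, log)
```

Every call writes a log entry, including the allowed ones, so the audit trail covers routine decisions as well as escalations.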

Human-in-the-Loop (HITL) Frameworks

Even top-tier enterprise AI agents will hit cases they can’t confidently resolve. Without a HITL system, these can turn into silent failures. Best practices include:

- Confidence Thresholds: Escalate to a human if confidence drops below a set level (e.g., 85%).

- Feedback Loops: Use human interventions as training data for the agent’s next iteration.

- Role-Based Routing: Send escalations to the right subject matter expert to prevent bottlenecks.

- Pro Tip: Build HITL tools into the existing interfaces employees already use, so collaboration feels natural.
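The three practices above can be combined into one small routing function. The sketch below assumes the agent reports a confidence score between 0 and 1; the queue names, the `AgentResult` type, and the `record_feedback` helper are hypothetical, and the 0.85 threshold is simply the example figure from the list.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.85  # example threshold from the text, not a recommendation

# Hypothetical mapping from topic to the expert queue that should review it.
EXPERT_QUEUES = {"billing": "finance-team", "legal": "compliance-team"}
DEFAULT_QUEUE = "general-review"

feedback_log = []  # human corrections, kept as candidate training data


@dataclass
class AgentResult:
    topic: str
    answer: str
    confidence: float  # assumed to be in the range 0.0 to 1.0


def route(result: AgentResult, threshold: float = CONFIDENCE_THRESHOLD) -> dict:
    """Let the agent answer when confident; otherwise escalate to the right queue."""
    if result.confidence >= threshold:
        return {"handled_by": "agent", "answer": result.answer}
    queue = EXPERT_QUEUES.get(result.topic, DEFAULT_QUEUE)
    # Pass the agent's draft along so the reviewer does not start from scratch.
    return {"handled_by": queue, "answer": None, "draft": result.answer}


def record_feedback(result: AgentResult, human_answer: str) -> None:
    """Keep the human correction next to the agent's draft for later retraining."""
    feedback_log.append({
        "topic": result.topic,
        "agent_answer": result.answer,
        "human_answer": human_answer,
        "confidence": result.confidence,
    })


confident = route(AgentResult("billing", "Invoice resent.", 0.93))
unsure = route(AgentResult("billing", "Possible double charge.", 0.60))
unmapped = route(AgentResult("shipping", "Parcel may be lost.", 0.40))
record_feedback(AgentResult("billing", "Possible double charge.", 0.60), "Refund issued.")
```

Falling back to a default queue keeps unmapped topics from disappearing, which is the silent-failure case the section warns about.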

Performance & Cost Optimization

If an agent costs more to run than the value it delivers, scaling is a failure. CTOs should monitor:

- Latency: Keep response times low as workloads grow.

- Inference Cost per Transaction: Watch token usage in LLM-based agents and route simple queries to cheaper models.

- Utilization Metrics: Ensure agents handle high-value tasks rather than low-impact busywork.

- Example: An e-commerce leader reduced inference costs by 37% by implementing a triage layer that sent simple questions to lightweight models.
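A triage layer like the one in the example can be sketched as a cheap classification step in front of the models. Everything specific here is an assumption for illustration: the model tiers, the per-token prices, the keyword list, and the word-count heuristic are placeholders, and a production system might use a small classifier instead.

```python
# Hypothetical prices in dollars per 1,000 tokens; real prices vary by provider.
MODEL_PRICE_PER_1K_TOKENS = {"light": 0.0005, "heavy": 0.01}

# Hypothetical markers of queries that need the more capable model.
COMPLEX_MARKERS = ("refund", "contract", "dispute")


def pick_model(query: str, max_simple_words: int = 12) -> str:
    """Crude triage: short queries without complex markers go to the light model."""
    text = query.lower()
    is_short = len(text.split()) <= max_simple_words
    has_marker = any(marker in text for marker in COMPLEX_MARKERS)
    return "light" if is_short and not has_marker else "heavy"


def transaction_cost(model: str, tokens: int) -> float:
    """Inference cost for one transaction, given total tokens used."""
    return MODEL_PRICE_PER_1K_TOKENS[model] * tokens / 1000


simple_choice = pick_model("What are your opening hours?")
complex_choice = pick_model("I want to dispute a charge on my account")
```

Tracking `transaction_cost` per request alongside the chosen tier makes it possible to see whether the triage rule is actually lowering the average cost per transaction.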

Scaling Strategy: From Pilot to Full Deployment

Instead of a “big bang” rollout, move through these four maturity stages:
