How Does Hadoop Help Solve the Big Data Problem?

Initially designed in 2006, Hadoop is an amazing piece of open source software that is particularly well suited to managing and analyzing big data in both structured and unstructured forms. Its creators based the technology on technical papers published by Google that described the Google File System and MapReduce. The initial design of Hadoop has undergone several modifications to become the go-to data management tool it is today. Hadoop helps solve a range of big data problems efficiently. Let's find out how.


As organizations began to use the tool, they also contributed to its development. Yahoo was the first organization to apply it, and other companies in the Internet space soon followed suit, including Facebook, Twitter, and LinkedIn, all of which contributed to the development of the tool.

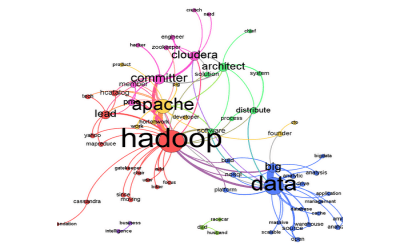

Today, Hadoop is a framework made up of tools and components offered by a range of vendors. This wide variety of tools and components has grown out of extensions to the basic framework.

How Hadoop handles big data

As more organizations began to apply Hadoop and contribute to its development, word spread about a tool that could manage raw data efficiently and cost-effectively. Because Hadoop could carry out what had seemed an impossible task, its popularity grew widely.

Hadoop is designed to run across many servers, and because it is open source, organizations can deploy it on as many machines as they need. As the tool matured, it became capable of robust data management and analytical tasks. Its ability to analyze data from a variety of sources is particularly notable.

Hadoop's ability to capture, manage, and process data rests on a set of core components, which are surrounded by supporting frameworks that help them work efficiently. The core components of Hadoop include the Hadoop Distributed File System (HDFS), YARN, MapReduce, Hadoop Common, Hadoop Ozone, and Hadoop Submarine. Together, they underpin the tools built on top of Hadoop.

The Hadoop Distributed File System, as the name suggests, is responsible for distributing data across the cluster's storage nodes, called DataNodes. HDFS splits each file into large blocks and stores copies of each block on several DataNodes, while a central NameNode maintains the directory structure of the file system and tracks which DataNodes hold each block.
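To make the idea of block distribution concrete, here is a minimal Python sketch of how a file could be split into fixed-size blocks and spread across DataNodes. It assumes the HDFS defaults of a 128 MB block size and a replication factor of 3 (configurable in a real cluster through `dfs.blocksize` and `dfs.replication`) and uses simple round-robin placement. Real HDFS uses a rack-aware placement policy, so the function names and node names here are hypothetical and only illustrate the data layout.

```python
import math

BLOCK_SIZE_MB = 128  # HDFS default block size (dfs.blocksize) in Hadoop 2 and later
REPLICATION = 3      # HDFS default replication factor (dfs.replication)


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> list[int]:
    """Return the size of each block; only the last block may be smaller."""
    if file_size_mb <= 0:
        return []
    count = math.ceil(file_size_mb / block_size_mb)
    sizes = [block_size_mb] * count
    sizes[-1] = file_size_mb - block_size_mb * (count - 1)
    return sizes


def place_replicas(num_blocks: int, datanodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct DataNodes, round-robin.

    Hypothetical simplification: real HDFS uses a rack-aware placement policy.
    """
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds number of DataNodes")
    placement = {}
    for block_id in range(num_blocks):
        start = block_id % len(datanodes)
        placement[block_id] = [datanodes[(start + i) % len(datanodes)]
                               for i in range(replication)]
    return placement


# NameNode-style metadata: which DataNodes hold each block of a 300 MB file.
blocks = split_into_blocks(300)
layout = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print(blocks)  # [128, 128, 44]
print(layout)  # {0: ['dn1', 'dn2', 'dn3'], 1: ['dn2', 'dn3', 'dn4'], 2: ['dn3', 'dn4', 'dn1']}
```

Because every block lives on three different nodes, losing one DataNode does not lose data, and a job can read different blocks from different nodes in parallel.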

Many applications run concurrently on the Hadoop framework, and YARN (Yet Another Resource Negotiator) is in charge of distributing cluster resources such as memory and CPU among them. YARN is also responsible for scheduling the jobs that run concurrently.
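How YARN divides the cluster depends on the scheduler it is configured with. As a rough illustration, the Python sketch below implements max-min fair sharing of memory, which is loosely the idea behind YARN's Fair Scheduler (Apache Hadoop also ships a Capacity Scheduler). It is a hypothetical simplification: it tracks memory only and ignores queues, CPU, and data locality, and the function and application names are invented for the example.

```python
def fair_share(total_mb: int, demands: dict[str, int]) -> dict[str, int]:
    """Max-min fair allocation of cluster memory among applications.

    Apps that ask for less than an equal share get their full demand; the
    leftover memory is split evenly among the rest. Works in whole MB, so
    any remainder from integer division is left unallocated.
    """
    allocation = {app: 0 for app in demands}
    remaining = total_mb
    pending = {app: d for app, d in demands.items() if d > 0}
    while pending and remaining > 0:
        share = remaining // len(pending)
        if share == 0:
            break
        satisfied = {app: d for app, d in pending.items() if d <= share}
        if satisfied:
            # Fully serve the small requests, then recompute the share for the rest.
            for app, d in satisfied.items():
                allocation[app] += d
                remaining -= d
                del pending[app]
        else:
            # Every remaining app wants more than an equal share: split evenly.
            for app in pending:
                allocation[app] += share
                remaining -= share
            break
    return allocation


# A 12 GB cluster shared by three hypothetical applications.
print(fair_share(12288, {"etl": 2048, "report": 8192, "ml": 8192}))
# {'etl': 2048, 'report': 5120, 'ml': 5120}
```

The small ETL job is served in full, and the two large jobs split what is left instead of one of them monopolizing the cluster.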

MapReduce is Hadoop's programming model for batch applications: it breaks a job into many small tasks and executes them in parallel across the cluster. The Hadoop Common component provides the shared libraries and utilities used by the other components of the framework. Hadoop Ozone provides a scalable, distributed object store, while Hadoop Submarine lets organizations run machine learning workloads on Hadoop. Submarine and Ozone are among the newest components of Hadoop.
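The MapReduce model is simple to state: a map function turns each input record into key/value pairs, the framework groups (shuffles) those pairs by key, and a reduce function combines the values for each key. The following single-process Python sketch walks through the classic word-count example. It only mimics the data flow and does not use any Hadoop API; the function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for each word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all values by key, as the framework does between phases."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine every value emitted for one key."""
    return key, sum(values)


def word_count(records: list[str]) -> dict[str, int]:
    # On a cluster, map tasks would run in parallel on the nodes holding each
    # block; here they simply run one after another in a single process.
    mapped = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


print(word_count(["big data big clusters", "data on clusters"]))
# {'big': 2, 'clusters': 2, 'data': 2, 'on': 1}
```

Because each map call looks at only one record and each reduce call at only one key, both phases can be spread across as many machines as the cluster has.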

Thus, Hadoop processes large volumes of data by having each component perform its role within an environment that provides the supporting structure. Beyond the components mentioned above, a Hadoop stack can include other tools, such as the Apache HBase database management system and tools for data management, development, and application execution. The tools an organization runs on the Hadoop framework depend on its needs.

How does Hadoop process large volumes of data

Hadoop is built to collect and analyze data from a wide variety of sources. It can do so because of its basic design: the framework runs on multiple nodes, which together accommodate the volume of data received and processed.

Tools based on the Hadoop framework run on a cluster of machines, which allows them to expand to accommodate the required volume of data. Instead of a single storage unit on a single device, Hadoop spreads storage across many devices.

Hadoop-based tools can also store and process large volumes of data because clusters scale horizontally: when more room or resources are needed, more nodes are added, and each new node contributes its own storage and processing capacity. An organization can also run multiple clusters for its Hadoop tools.

In short, Hadoop's components and tools can store and manage big data because each serves a specific purpose and all of them operate across clusters.
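Horizontal scaling can be reasoned about with simple arithmetic: each node adds raw disk, and HDFS replication (three copies by default) divides that raw space by the replication factor. The Python sketch below estimates usable capacity and the number of nodes needed for a target data volume. The 25% reserve for temporary data and overhead, and the 48 TB of disk per node in the example, are illustrative assumptions rather than Hadoop defaults.

```python
import math


def usable_capacity_tb(nodes: int, raw_tb_per_node: float, replication: int = 3,
                       reserved_fraction: float = 0.25) -> float:
    """Estimate how much data a cluster can hold after replication.

    reserved_fraction is a hypothetical allowance for intermediate files and
    OS overhead; adjust it for your own environment.
    """
    raw = nodes * raw_tb_per_node
    return raw * (1 - reserved_fraction) / replication


def nodes_needed(data_tb: float, raw_tb_per_node: float, replication: int = 3,
                 reserved_fraction: float = 0.25) -> int:
    """Smallest node count whose usable capacity covers data_tb."""
    per_node = usable_capacity_tb(1, raw_tb_per_node, replication, reserved_fraction)
    return math.ceil(data_tb / per_node)


print(usable_capacity_tb(10, 48))  # 120.0 (TB usable on 10 nodes)
print(nodes_needed(300, 48))       # 25 (nodes to hold 300 TB)
```

Growing from 120 TB to 300 TB in this example means adding nodes of the same kind rather than buying a larger single machine, which is what scaling horizontally means in practice.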

Why Hadoop is used in big data

Before Hadoop, storing and analyzing large volumes of structured and unstructured data was, for many organizations, an unachievable task. As mentioned above, Hadoop made it possible through its core and supporting components.

Certain features of Hadoop make it particularly attractive for processing and storing big data. Chief among the features that drew organizations to Hadoop is its core ability to accept and manage data in its raw form.

Sources of data abound, and organizations strive to make the most of the data available to them. To do so, they need tools that can collect and process raw data as quickly as possible, which is a strong point of Hadoop.

Hadoop is also used for big data because tools based on the framework collect and process large pools of data efficiently and cost-effectively. Research has shown that organizations can save significantly by applying Hadoop tools: storage that could cost up to $50,000 costs only a few thousand dollars with Hadoop tools.
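Accepting raw data is often described as "schema on read": data lands in storage as-is, and a structure is applied only when the data is queried. Here is a small, hypothetical Python illustration of that idea, independent of any Hadoop API; the record formats, field names, and helper functions are invented for the example. In a Hadoop stack, tools such as Apache Hive apply the same principle to files already stored in HDFS.

```python
import csv
import io
import json

# Raw records are kept exactly as they arrived, with no upfront schema.
RAW_RECORDS = [
    '{"user": "u1", "action": "click", "ms": 120}',
    "u2,purchase,340",
    '{"user": "u3", "action": "click"}',
]


def parse(record: str) -> dict:
    """Apply a structure at read time; both JSON and CSV lines are accepted."""
    record = record.strip()
    if record.startswith("{"):
        data = json.loads(record)
    else:
        user, action, ms = next(csv.reader(io.StringIO(record)))
        data = {"user": user, "action": action, "ms": int(ms)}
    # Fields missing from a raw record become None instead of failing the load.
    return {"user": data.get("user"), "action": data.get("action"), "ms": data.get("ms")}


def clicks(records: list[str]) -> list[str]:
    """An example query: which users clicked, decided only when the data is read."""
    return [row["user"] for row in map(parse, records) if row["action"] == "click"]


print(clicks(RAW_RECORDS))  # ['u1', 'u3']
```

Since nothing was rejected at load time, a new question next month can use a different parser over the same raw records.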

Hadoop is a cost-effective option because it is open source. Vendors can draw from a common pool and improve the areas that interest them. The Hadoop framework itself is free; organizations purchase subscriptions only for the vendor-developed add-ons they require.

Vendors of Hadoop add-ons are members of the community, and they develop it further through the products they offer. When organizations find products tailored to their data storage, management, and analysis needs, they subscribe to them and use them as add-ons to the basic Hadoop framework.

Hadoop is governed by the Apache Software Foundation rather than by a vendor or group of vendors, so every vendor and interested party has access to it. Vendors focus on modifying Hadoop by tweaking its functionality to serve extra purposes. They may also bundle other software with Hadoop, and organizations can subscribe to these bundles; vendors can even build bundles for specific organizations. Whatever approach produced the version of Hadoop an organization uses, its cost is known to be significantly lower than other available options because access to the basic framework is free. Simply put, vendors are at liberty to develop the version of Hadoop they wish and make it available to users for a fee.

The flexibility of Hadoop is another reason it is increasingly the go-to option for storing, managing, and analyzing big data. With Hadoop, data of any form can be stored, regardless of its structure. Organizations have typically limited themselves to collecting only certain forms of data; Hadoop removes this limitation because collecting and processing the needed data costs little. The ability to collect many different forms of data therefore drives Hadoop's use for storing and managing big data.

Organizations were drawn to the science of big data by the insights that can be gained from storing and analyzing large volumes of data. Hadoop gives organizations more room to gather and analyze data and gain deeper insight into market trends and consumer behavior.

Access to historical data is another reason for Hadoop's widespread use. Before Hadoop, the available methods of storing and analyzing data limited both the scope of the data kept and how long it could be retained. As a result, even important data could be kept only for limited periods, in some cases as little as three months.

Because data can be stored for much longer, Hadoop provides fuller insights. This longevity also reflects Hadoop's cost-effectiveness: instead of being kept for short periods, data can be retained for long periods and analyzed whenever necessary. Hadoop preserves historical data, and history is critical to big data.

Hadoop's components allow for full analysis of large volumes of data. The core component that drives this analysis is MapReduce, which is also compatible with other tools used for data analysis in certain settings. MapReduce jobs are written natively in Java, and the Hadoop Streaming utility lets developers write them in other languages such as Python and Ruby.
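Hadoop Streaming works by running any executable as the mapper or reducer: each one reads lines on standard input and writes tab-separated key/value lines to standard output, and the framework sorts the mapper output by key before it reaches the reducer. The Python sketch below follows that convention for word count. The `map`/`reduce` command-line switch and the script name `wordcount.py` are assumptions of this example, and submitting it to a cluster also requires the hadoop-streaming JAR, whose location depends on the installation.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'word<TAB>1' line per word, the text format Streaming passes along."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum counts per word. Input must be sorted by key, as Hadoop guarantees."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical switch: "map" runs the mapper, anything else runs the reducer.
    step = mapper if sys.argv[1] == "map" else reducer
    for out in step(sys.stdin):
        print(out)
```

The same job can be tried locally before touching a cluster, with the shell's `sort` standing in for Hadoop's shuffle: `cat input.txt | python wordcount.py map | sort | python wordcount.py reduce`.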

Big data processing using Hadoop

Currently, there are two major Hadoop vendors: Cloudera, which merged with its rival Hortonworks in a deal announced in late 2018, and MapR. Although there are numerous other vendors in the Hadoop space, these two organizations are likely to drive many of the changes to Hadoop in the near future.

Vendors generally develop Hadoop distributions which could be add-ons of the basic framework or dedicated portions of the framework for specific purposes. Currently, Cloudera promises to deliver a data cloud which will be the first of its kind in the Hadoop space.

To make use of vendor-developed tools, organizations must understand the basics of these tools and how their functionality applies to their big data needs. Big data processing with Hadoop relies on tools that vendors develop for specific purposes, and how well a tool fits an organization's needs determines how effective Hadoop will be for that organization.

Since Hadoop integrates tools and components on top of a basic framework, these tools and components must be properly aligned for maximum efficiency. It is the vendor's job to build the system best suited to a specific client's needs by combining the necessary tools and components into a Hadoop distribution.

Applications of Hadoop in big data

Organizations, especially those that generate a lot of data, rely on Hadoop and similar platforms to store and analyze it. Facebook is one of them: it generates an enormous volume of data and has been found to use Hadoop in its operations. Hadoop's flexibility allows it to serve multiple areas of Facebook in different capacities, so Facebook's data is divided among different Hadoop components and related tools. Status updates, for example, are stored in MySQL, while the Facebook Messenger app is known to run on HBase.

Experts have also stated that e-commerce giant Amazon uses Hadoop for efficient data processing through its Elastic MapReduce (EMR) web service. EMR runs Hadoop and is suited to data processing tasks including log analysis, web indexing, data warehousing, financial analysis, scientific simulation, machine learning, and bioinformatics.

Other organizations that apply components of Hadoop include eBay and Adobe. eBay uses Hadoop components such as Java MapReduce, Apache Hive, Apache HBase, and Apache Pig for processes such as research and search optimization.

Adobe is known to use Apache HBase alongside core Apache Hadoop, applying them in both production and development on clusters of 30 nodes. There are reports that Adobe has since expanded the number of nodes it uses.

Although these examples show Hadoop applied on a large scale, vendors have also developed tools that let organizations apply Hadoop on a smaller scale across different operations.